SEO is a well-known term, but not many people know exactly what technical SEO is or why it’s relevant in 2023.

First off, let’s understand the basics.

SEO (Search Engine Optimization) is the broad term for the strategies and techniques used to improve a website’s visibility and ranking in search engine results.

Technical SEO is one aspect of SEO that focuses specifically on technical elements, like website speed and responsiveness, architecture, structured data, and mobile-friendliness. It ensures that search engines can effectively crawl, interpret, and index a website’s content.

With search engines continuing to prioritize user experience and website performance, technical SEO is as relevant in 2023 as ever. It helps you meet the growing demand for fast-loading, mobile-friendly websites. And as websites become more complex and dynamic, a technical SEO audit helps you catch issues like poor crawlability, duplicate content, and messy URL structures, so that search engines can effectively understand and index your content.

Simply put, technical SEO empowers you to enhance your online presence, attract organic traffic, and provide a seamless user experience in the evolving digital landscape.

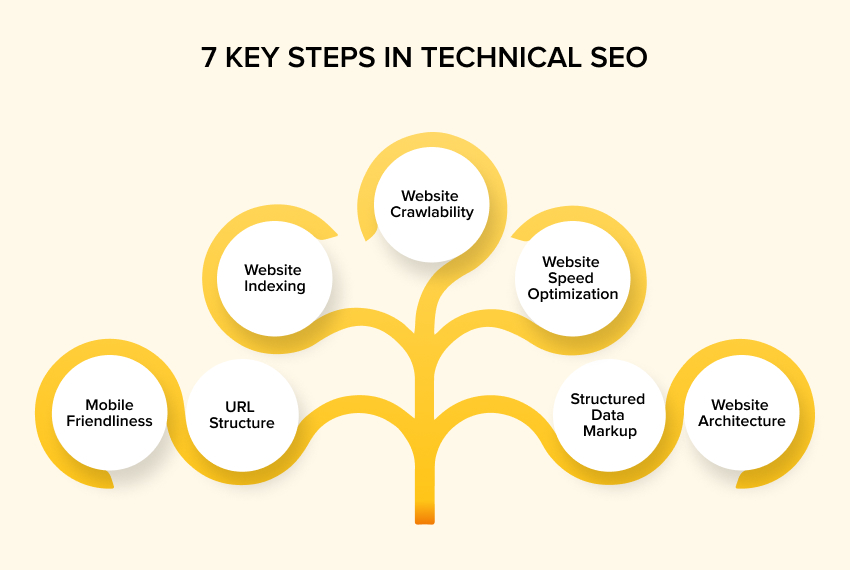

So, how do you get started with technical SEO? While the specific steps will vary depending on your website and requirements, here is an overview of the key steps involved:

7 KEY STEPS IN TECHNICAL SEO

- Website Crawlability: Keep your website accessible to search engine crawlers.

- Website Indexing: Confirm that search engines are indexing your website pages properly.

- Website Speed Optimization: Optimize your website’s loading speed.

- Mobile-Friendliness: Ensure your website is mobile-friendly and responsive, adapting well to different screen sizes and devices.

- URL Structure: Use clean, descriptive, SEO-friendly URLs that reflect the content hierarchy and are easy for users and search engines to understand.

- Structured Data Markup: Provide additional context to search engines about your website’s content.

- Website Architecture: Organize your website’s structure logically and hierarchically.

Now that we have an overview, let’s dig deeper into why each area matters, the strategies to implement it, and the tools you can use.

WEBSITE SPEED AND RESPONSIVENESS

Website speed measures how quickly your website’s pages load and display content to the users. Responsiveness, on the other hand, is your website’s ability to adapt across different devices and screen sizes.

Why is it important?

Search engines like Google use page speed as a ranking signal, so faster-loading websites have an edge in search results. A slow-loading website also hurts the user experience, leading to higher bounce rates and lower engagement.

Mobile responsiveness is equally important, as search engines prioritize mobile-friendly websites in mobile search results. And with mobile usage still growing, a responsive website delivers a seamless experience across devices, improving both rankings and user satisfaction.

What are the best strategies for improving site speed and responsiveness?

- Compressing images without compromising on quality.

- Minifying CSS and JavaScript files by removing unnecessary code, comments, and whitespace to reduce their file size.

- Using browser caching to store static resources locally on a user’s device, which reduces load times on repeat visits.

- Implementing a content delivery network (CDN) to serve your website content quickly from servers distributed around the world.

- Upgrading your hosting plan or server for better performance, and using caching plugins or CDN features designed for dynamic content.
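Browser caching is mostly a matter of HTTP response headers. As a minimal sketch, assuming a Python standard-library static server and a hypothetical caching policy (one year for fingerprinted CSS, JavaScript, image, and font files; revalidation for HTML), the handler below attaches a `Cache-Control` header to every response. `cache_control_for`, `CachingHandler`, `serve`, and the extension list are illustrative choices, not a standard.

```python
import os
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical policy: file types that are safe to cache for a long time because
# their URLs change whenever their content changes (e.g. app.3f9a1c.css).
LONG_LIVED = {".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2"}
ONE_YEAR = 60 * 60 * 24 * 365  # seconds


def cache_control_for(path):
    """Pick a Cache-Control value from the requested path's file extension."""
    ext = os.path.splitext(path.split("?", 1)[0])[1].lower()
    if ext in LONG_LIVED:
        return f"public, max-age={ONE_YEAR}, immutable"
    # HTML and everything else: the browser may store a copy but must revalidate it.
    return "no-cache"


class CachingHandler(SimpleHTTPRequestHandler):
    """Serves files from the current directory and adds a Cache-Control header."""

    def end_headers(self):
        self.send_header("Cache-Control", cache_control_for(self.path))
        super().end_headers()


def serve(port=8000):
    """Run the server until interrupted (blocks the calling thread)."""
    ThreadingHTTPServer(("", port), CachingHandler).serve_forever()
```

Calling `serve()` starts the server on port 8000. The long `max-age` is only safe when asset file names change with every deploy; otherwise returning visitors may see stale files for up to a year.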

What tools can you use to measure site speed and responsiveness?

- Google’s PageSpeed Insights: for insights on both mobile and desktop performance, along with suggestions for improvement.

- GTmetrix: for analyzing website speed with actionable recommendations.

- Pingdom Website Speed Test: for a detailed breakdown of your site’s load time and performance bottlenecks.

- WebPageTest: for comprehensive performance analysis, including waterfall charts and a filmstrip view of page loading.

- Lighthouse: integrated into Google Chrome DevTools, for audits and reports on performance, accessibility, SEO, and more.
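PageSpeed Insights can also be queried programmatically, which helps when you want to track scores over time. The sketch below assumes the public v5 `runPagespeed` endpoint and the `lighthouseResult.categories.performance.score` field of its JSON response, as we understand the current format; check Google’s API reference before relying on it, and add an API key for anything beyond occasional use. The helper names are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint of the PageSpeed Insights API, version 5.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile"):
    """Build the request URL for one page and one strategy ("mobile" or "desktop")."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def performance_score(psi_response):
    """Pull the Lighthouse performance score (0 to 1) out of a parsed API response."""
    return psi_response["lighthouseResult"]["categories"]["performance"]["score"]


def fetch_score(page_url, strategy="mobile"):
    """Call the API over the network and return the performance score."""
    with urlopen(psi_request_url(page_url, strategy), timeout=120) as resp:
        return performance_score(json.load(resp))
```

For example, `fetch_score("https://www.example.com/")` would return a float such as `0.87`; multiply by 100 to match the score shown in the PageSpeed Insights interface.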

WEBSITE ARCHITECTURE AND URL STRUCTURE

Also known as information architecture, website or site architecture is the organization and structure of a website’s content and pages. It involves arranging information, navigation systems, and the overall hierarchy of the pages and sections.

As you may already know, a URL (Uniform Resource Locator) is a string of characters that provides the location of a specific resource on the internet. It serves as a unique identifier for a web page or file and typically consists of the scheme or protocol (e.g., HTTP, HTTPS), the domain name, and the path.
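To see these parts concretely, Python’s standard `urllib.parse.urlsplit` breaks a URL into its components. The example URL is made up; note that real URLs often also carry a query string and a fragment after the path.

```python
from urllib.parse import urlsplit

# A made-up URL with every common component present.
url = "https://www.example.com/blog/technical-seo-guide?utm_source=newsletter#tools"
parts = urlsplit(url)

print(parts.scheme)    # https
print(parts.netloc)    # www.example.com
print(parts.path)      # /blog/technical-seo-guide
print(parts.query)     # utm_source=newsletter
print(parts.fragment)  # tools
```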

Why is it important?

Optimizing site architecture and URL structure helps users navigate your website easily and helps search engines understand your content. It also improves the user experience, makes crawling and indexing easier, and strengthens overall website performance and search visibility.

What are the best strategies for optimizing site architecture and URL structure?

- Designing your website’s architecture logically and intuitively by grouping related content into categories and subcategories.

- Creating URLs that are concise, descriptive, and representative of the page’s content, with relevant keywords. Avoid unnecessary numbers or cryptic characters, as they can confuse users and search engines.

- Using hyphens rather than underscores or spaces to separate words, since search engines recognize hyphens as word separators.

- Avoiding keyword stuffing and focusing on creating user-friendly URLs with a natural flow.

- Minimizing URL parameters, especially ones that generate dynamic URLs. Long, complex parameterized URLs are harder for search engines to crawl and index.

- Implementing proper canonical tags and redirects to handle duplicate content and URL variations.

- Using internal links strategically to connect related pages and strengthen your site’s structure.
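To make the parameter and canonical-tag advice concrete, here is a minimal sketch of URL normalization in Python. It assumes a site served over HTTPS where the tracking parameters in the hypothetical `IGNORED_PARAMS` set never change page content; `canonical_url` and `canonical_tag` are illustrative names. Remaining parameters are sorted so that the same content always maps to one URL.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that never change what the page shows on this site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "utm_term",
                  "utm_content", "gclid", "fbclid", "sessionid"}


def canonical_url(url):
    """Collapse common URL variations into one preferred form."""
    parts = urlsplit(url)
    # Keep only content-changing parameters, in a stable (sorted) order.
    params = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit((
        "https",                # assumption: the whole site is served over HTTPS
        parts.netloc.lower(),   # host names are case-insensitive
        parts.path or "/",      # paths are case-sensitive, so they are left as-is
        urlencode(params),
        "",                     # fragments are never sent to the server
    ))


def canonical_tag(url):
    """Render the <link> element to place in the page's <head>."""
    return f'<link rel="canonical" href="{canonical_url(url)}">'


print(canonical_url("HTTP://WWW.Example.com/shoes?utm_source=mail&color=red&size=9#reviews"))
# https://www.example.com/shoes?color=red&size=9
```

Which parameters are safe to drop is a per-site decision; for variations that should never be served at all, a redirect to the canonical URL is usually cleaner than a tag alone.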

What tools can you use to evaluate site architecture and URL structure?

- Screaming Frog: for crawling your website and analyzing its URLs, internal linking, and overall site architecture.

- Google Search Console: for data on your website’s performance in Google search results, including indexed pages and crawling or indexing errors.

- Google Analytics: for insights into how users navigate your site and data on page views, bounce rates, and user flow.

- Moz Pro: for site audits and crawl diagnostics that analyze your website’s structure, flag URL structure issues, and offer optimization recommendations and internal linking insights.

- SEMrush: for site audits covering site structure, URL issues, internal linking, and optimization suggestions.
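One structural signal worth checking with these tools is click depth: how many clicks a page sits from the home page. Pages buried many clicks deep are harder for users and crawlers to reach, and orphan pages (linked from nowhere) cannot be discovered through internal links at all. A minimal Python sketch of the idea, assuming you already have a map of internal links (the `site` data below is hypothetical), is a breadth-first search from the home page:

```python
from collections import deque


def click_depth(links, home="/"):
    """Breadth-first search: the fewest clicks from the home page to each reachable URL."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # in BFS, the first visit is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def orphan_pages(links, all_pages, home="/"):
    """Pages that exist but cannot be reached by following internal links."""
    return sorted(set(all_pages) - set(click_depth(links, home)))


# Hypothetical internal-link map: page -> pages it links to.
site = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/technical-seo/"],
    "/products/": ["/products/shoes/"],
    "/products/shoes/": ["/products/shoes/trail-runner/"],
}
print(click_depth(site))
# {'/': 0, '/blog/': 1, '/products/': 1, '/blog/technical-seo/': 2, '/products/shoes/': 2, '/products/shoes/trail-runner/': 3}
print(orphan_pages(site, list(site) + ["/old-landing-page/"]))
# ['/old-landing-page/']
```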

ROBOTS.TXT FILE AND XML SITEMAP

The robots.txt file, a text file placed in the website’s root directory, tells search engine crawlers which pages or files they can access and crawl. It does this through directives such as “allow” and “disallow”, which control crawler behavior.
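For illustration, here is a hypothetical robots.txt for `www.example.com`, checked with Python’s standard-library `urllib.robotparser`. One caveat: this parser applies the first matching rule in file order, whereas Google uses the most specific (longest) matching rule, so the `Allow` line is placed first to make both interpretations agree. The `Sitemap` line links the file to your XML sitemap; reading it back with `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt for www.example.com.
ROBOTS_TXT = """\
User-agent: *
Allow: /admin/help/
Disallow: /admin/
Disallow: /search

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/blog/technical-seo/", "/admin/settings", "/admin/help/faq", "/search?q=shoes"]:
    allowed = parser.can_fetch("Googlebot", "https://www.example.com" + path)
    print(path, "->", "allowed" if allowed else "blocked")
# /blog/technical-seo/ -> allowed
# /admin/settings -> blocked
# /admin/help/faq -> allowed
# /search?q=shoes -> blocked

print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```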

An XML sitemap lists all the URLs of a website that should be indexed by search engines, serving as a roadmap for search engine crawlers and helping them understand the structure and content of the website.

Why is it important?

Robots.txt is not a security measure (the file is publicly readable, and a disallowed URL can still be indexed if other sites link to it), but it helps steer crawlers away from irrelevant pages such as admin sections or duplicate content. XML sitemaps, for their part, can carry metadata about each URL, such as its last modification date, which helps search engines crawl and index the site efficiently. The sitemap protocol also defines a priority field, although Google says it ignores it.

What are the best strategies for optimizing the robots.txt file and XML sitemap?

- Placing your robots.txt file in the website’s root directory and using the “disallow” directive to keep crawlers out of irrelevant sections (protect genuinely sensitive pages with authentication instead).

- Regularly reviewing and updating the file to reflect changes in page structure or content.

- Creating an XML sitemap that includes all relevant and important URLs of your website, and updating it when new pages are added or existing ones are modified.

- Ensuring that the sitemap is error-free and adheres to XML sitemap protocol standards.

- Submitting your XML sitemap through tools like Google Search Console or Bing Webmaster Tools to notify search engines of its existence and improve the likelihood of proper indexing.

- Monitoring these tools regularly and promptly fixing any errors they report.
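Generating the sitemap from your own list of pages keeps it in sync with the site. As a minimal sketch using Python’s standard library, assuming you already have each page’s URL and last-modified date (the entries and the `build_sitemap` name are hypothetical), the function below writes a sitemaps.org-format file with `loc` and `lastmod` only. The protocol’s optional `changefreq` and `priority` fields are left out because Google says it ignores them, and ElementTree escapes characters like `&` in URLs, as the protocol requires.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def build_sitemap(pages):
    """Build a sitemap XML string from (url, last_modified_date) pairs."""
    pages = list(pages)
    if len(pages) > MAX_URLS:
        raise ValueError("too many URLs: split them across files listed in a sitemap index")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc                     # special characters are escaped
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()  # W3C date, e.g. 2023-06-01
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://www.example.com/", date(2023, 6, 1)),
    ("https://www.example.com/blog/technical-seo/", date(2023, 6, 15)),
]))
```

Writing the result to `sitemap.xml` in the site root and referencing it from robots.txt or Search Console completes the loop.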

What tools can you use to check your robots.txt file and XML sitemap?

- Google Search Console: for testing and validating your robots.txt file, insights into how Googlebot interprets your directives, and Sitemaps reports to submit and monitor your XML sitemap.

- Bing Webmaster Tools: for similar testing and validation of your robots.txt file along with a Sitemaps feature for submitting and monitoring your XML sitemap for Bing’s search engine.

- Screaming Frog: for auditing your XML sitemap, identifying issues, and reviewing the structure and URLs it includes.
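Robots.txt governs crawling, but whether a crawled page may be indexed is also decided on the page itself. As a quick page-level check, the Python sketch below (standard-library `html.parser`; `check_indexability` is a hypothetical helper name) reports whether a page carries a `noindex` robots meta tag and which canonical URL it declares. It does not look at the `X-Robots-Tag` HTTP header or at robots.txt, both of which also matter.

```python
from html.parser import HTMLParser


class IndexabilityParser(HTMLParser):
    """Collects robots meta directives and the rel="canonical" URL from a page."""

    def __init__(self):
        super().__init__()
        self.robots_directives = set()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag and attribute names; values can be None.
        attrs = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attrs.get("name", "").lower() == "robots":
            for directive in attrs.get("content", "").split(","):
                self.robots_directives.add(directive.strip().lower())
        elif tag == "link" and "canonical" in attrs.get("rel", "").lower().split():
            self.canonical = attrs.get("href")


def check_indexability(html):
    """Report whether the page allows indexing and which canonical URL it declares."""
    parser = IndexabilityParser()
    parser.feed(html)
    blocked = {"noindex", "none"} & parser.robots_directives
    return {"indexable": not blocked, "canonical": parser.canonical}


page = ('<head><meta name="robots" content="noindex, follow">'
        '<link rel="canonical" href="https://example.com/shoes/"></head>')
print(check_indexability(page))
# {'indexable': False, 'canonical': 'https://example.com/shoes/'}
```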

STRUCTURED DATA AND SCHEMA MARKUP

Structured data and schema markup provide additional context and meaning to your web page content. Structured data is a standardized format for organizing and annotating data so that search engines can understand it more easily, and schema markup is a specific implementation of structured data that uses the Schema.org vocabulary.

Why is it important?

Structured data and schema markup give search engines clear, structured information about your webpage’s content. This, in turn, can improve your website’s visibility, click-through rates, and the user experience in search engine results.

What are the best strategies for implementing structured data and schema markup?

- Identifying the key elements and entities on your website that could benefit from structured data and then determining the most relevant schema types for each.

- Selecting the appropriate schema types that best describe your content, referring to the documentation and guidelines provided by Schema.org for accurate implementation.

- Implementing the markup in JSON-LD (JavaScript Object Notation for Linked Data), the format Google recommends, or inline in your HTML, and covering the relevant properties of each page, such as the title, description, author, date, product details, and reviews, with the correct structure and in line with the guidelines.

- Using structured data testing tools to validate your implementation and confirm that search engines can parse and recognize the markup.

- Regularly monitoring your structured data implementation, especially with changes or additions, and keeping up with updates in the schema guidelines for accuracy.
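For the JSON-LD route, here is a minimal sketch that builds a schema.org `Article` object with Python’s `json` module and wraps it in the `<script type="application/ld+json">` tag that goes in the page. The property set is a small illustrative subset, and the function name and sample values are hypothetical; check the types and properties you use against Schema.org and Google’s structured data guidelines, then validate the output with a tool such as the Rich Results Test.

```python
import json


def article_json_ld(headline, author, date_published, description, url):
    """Build a schema.org Article object and wrap it in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "description": description,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2023-06-01"
        "mainEntityOfPage": url,
    }
    # Escape "</" so text such as "</script>" inside a value cannot end the tag early.
    payload = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(article_json_ld(
    headline="What Is Technical SEO?",
    author="Jane Doe",
    date_published="2023-06-01",
    description="An overview of the key steps in technical SEO.",
    url="https://www.example.com/blog/technical-seo/",
))
```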

What tools can you use for checking structured data and schema markup?

- Schema Markup Validator: the successor to Google’s retired Structured Data Testing Tool, for testing and validating your structured data markup with detailed reports.

- Google Rich Results Test: for testing and previewing how your structured data markup appears in Google’s search results with issues highlighted.

- Bing Markup Validator: for testing and validating your structured data markup for Bing’s search engine and identifying errors.

IN CONCLUSION

Undoubtedly, technical SEO plays a critical role in website visibility and user experience. Mobile optimization, for instance, helps you reach the large and growing share of users who browse on mobile devices.

Additionally, optimizing site speed and responsiveness, improving site architecture and URL structure, using structured data and schema markup, and addressing crawlability and indexability issues can improve your chances of ranking well in search engine results.

Overall, technical SEO forms a strong foundation for effective SEO strategies, maximizing organic search visibility and driving relevant organic traffic to a website. It is, therefore, vital for succeeding in 2023’s increasingly competitive digital landscape.

FAQs

What is technical SEO?

Technical SEO is the practice of optimizing a website’s technical elements to improve its visibility and performance in search engine rankings. It focuses on factors like site speed, site architecture, URL structure, crawlability, indexability, and mobile responsiveness, ensuring that search engines can effectively crawl, index, and understand your website’s content. By following technical SEO best practices, you can improve your website’s visibility in search results, increase organic traffic, and enhance the user experience.

What are the most common technical SEO issues?

Common technical SEO issues, such as slow site speed, improperly indexed pages, duplicate content, broken links, improper redirects, unoptimized URL structures, missing XML sitemaps, robots.txt misconfigurations, and poor mobile responsiveness, can hurt your website’s performance. Audit your website regularly for these issues and fix them to keep your technical SEO in good shape.
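One common form of improper redirect is a chain, where URL A redirects to B, which redirects to C, so every visit and every crawl pays for extra hops. A minimal Python sketch for spotting chains and loops, assuming you already have a redirect map (for example exported from a crawl or your server configuration; the data and function names below are hypothetical), follows each source to its final target:

```python
def resolve_redirects(redirects, start):
    """Follow `start` through a redirect map; return (final_url, number_of_hops).

    Raises ValueError if the redirects form a loop.
    """
    visited = [start]
    url = start
    while url in redirects:
        url = redirects[url]
        if url in visited:
            raise ValueError("redirect loop: " + " -> ".join(visited + [url]))
        visited.append(url)
    return url, len(visited) - 1


def find_chains(redirects):
    """Map every source that needs more than one hop to the final URL it should point at."""
    chains = {}
    for source in redirects:
        final, hops = resolve_redirects(redirects, source)
        if hops > 1:
            chains[source] = final
    return chains


# Hypothetical redirect map: source path -> target path.
redirects = {
    "/old-shoes": "/shoes-2022",
    "/shoes-2022": "/shoes",
    "/promo": "/shoes",
}
print(find_chains(redirects))  # {'/old-shoes': '/shoes'}
```

Each reported source can then be updated to redirect straight to its final URL in a single hop.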

How can I improve my website’s mobile responsiveness?

With more and more people browsing on mobile devices, mobile responsiveness is vital for technical SEO. To improve it, first make sure your website uses a responsive design that adapts to different screen sizes and resolutions. Next, optimize images and media for mobile viewing, make content easy to read on smaller screens, and keep loading times fast on mobile devices. Finally, test on mobile devices to find and fix any issues that hamper the user experience.
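A quick first check for the responsive-design step is whether a page declares a viewport meta tag with `width=device-width`, typically `<meta name="viewport" content="width=device-width, initial-scale=1">`. The Python sketch below (standard library only; `has_responsive_viewport` is a hypothetical helper name) only detects that tag. It cannot tell whether your CSS actually adapts to small screens, so it complements, rather than replaces, testing on real devices.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of <meta name="viewport">, if the page has one."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "viewport":
            self.viewport = attrs.get("content") or ""


def has_responsive_viewport(html):
    """True if the page's viewport meta tag sets width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    # Parse "width=device-width, initial-scale=1" into {"width": ..., "initial-scale": ...}.
    settings = {}
    for part in finder.viewport.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    return settings.get("width") == "device-width"


print(has_responsive_viewport(
    '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
))  # True
```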

What is schema markup, and how does it help?

Schema markup is a structured data vocabulary used to annotate webpage content and give it additional context, helping search engines understand what the content is about. Implementing schema markup can make your pages eligible for rich snippets and other enhanced search features, improving their visibility in search results. It also lets you provide detailed information about your content, such as reviews, ratings, prices, and events, making your listings more attractive and informative to users.

How do I optimize my website’s URLs?

To optimize your website’s URLs, keep them concise and descriptive, and include relevant keywords without stuffing them. Use hyphens to separate words instead of underscores or spaces, and avoid irrelevant numbers or special characters. Implement proper redirects to handle URL changes and variations. Keep your URLs organized and logical, reflecting the structure and hierarchy of your website’s content. Optimized URLs help search engines understand your content, give users a better experience, and can improve click-through rates in search results.
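Many sites generate these URL slugs automatically from page titles. A minimal Python sketch of such a helper, under our own assumptions (the `slugify` name and the hypothetical `max_words` cap are illustrative, not part of any standard), lowercases the title, folds accented letters to plain ASCII, and joins the words with hyphens:

```python
import re
import unicodedata


def slugify(title, max_words=8):
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Fold accented characters to ASCII (é -> e) and drop anything that cannot be folded.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Drop apostrophes so "Beginner's" becomes "beginners" rather than "beginner-s".
    text = text.replace("'", "")
    # Treat every run of other non-alphanumeric characters (spaces, underscores, punctuation) as a break.
    words = re.sub(r"[^a-z0-9]+", " ", text.lower()).split()
    return "-".join(words[:max_words])


print(slugify("Technical SEO: A Beginner's Guide"))  # technical-seo-a-beginners-guide
print(slugify("Café Menu_Items & Prices"))           # cafe-menu-items-prices
```

Capping the word count keeps slugs concise; an editor can still shorten a generated slug by hand when a title is long.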